Most capable AI models run in the cloud, so using them requires an internet connection and access to powerful GPUs. GPT-OSS changes the game.

With Ollama, you can install and run this large language model entirely offline, even on a laptop or Azure VM.

In this guide, I’ll walk you through setting it up, explain the benefits of running locally, and show you how to integrate it into your apps.

Take a look at the related video below.

Why Run GPT-OSS Locally?

- Data Governance: Keep sensitive data off external servers.

- Offline Access: Ideal for edge devices or environments without reliable internet.

- Cost Control: Avoid per-token API fees; the main cost is a one-time hardware investment.

GPT-OSS Versions & Requirements

- 20B parameters: Runs on 16GB RAM; ideal for laptops.

- 120B parameters: Requires 64GB RAM and benefits from GPU acceleration.

- Note that the same installation process can be used for both versions.

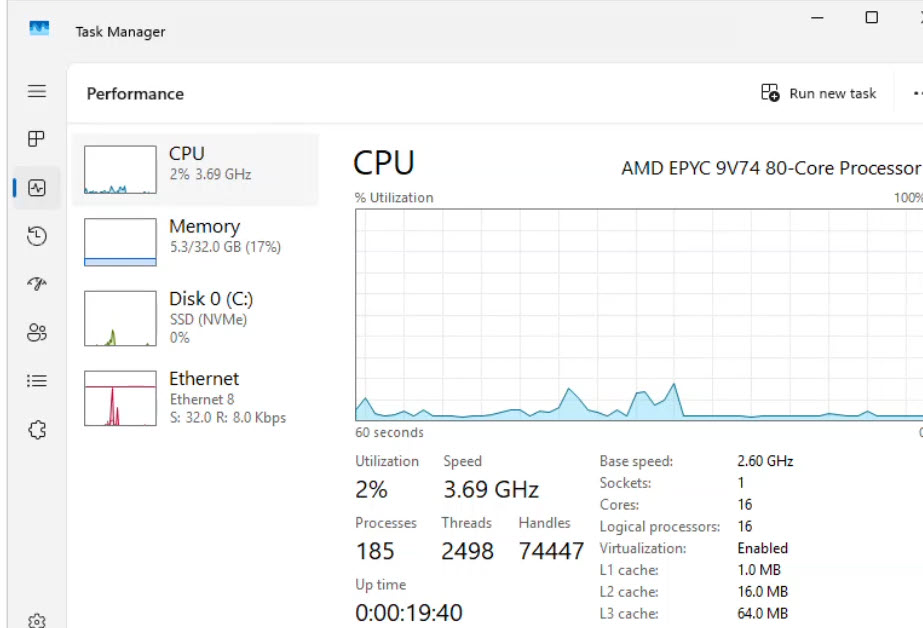

System Specs for Ollama
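If you want to script the choice between the two versions, the RAM thresholds above translate into a small helper. This is a minimal Python sketch, not part of Ollama: `pick_model`, `detect_total_ram_gb`, and the 10% `slack` (allowing for the OS reporting slightly less memory than is installed) are hypothetical assumptions, while `gpt-oss:20b` and `gpt-oss:120b` are the model tags used in the Ollama library.

```python
import os
from typing import Optional

# (minimum RAM in GB, Ollama model tag), largest model first.
# Thresholds come from the requirements listed above.
MODEL_TIERS = [
    (64, "gpt-oss:120b"),
    (16, "gpt-oss:20b"),
]


def pick_model(total_ram_gb: float, slack: float = 0.9) -> Optional[str]:
    """Return the largest GPT-OSS tag that fits in RAM, or None if none fits.

    `slack` is an assumption: operating systems usually report a bit less
    usable memory than is installed, so a 16GB laptop may show ~15.5GB.
    """
    for min_gb, tag in MODEL_TIERS:
        if total_ram_gb >= min_gb * slack:
            return tag
    return None


def detect_total_ram_gb() -> Optional[float]:
    """Best-effort physical RAM in GiB on Linux/macOS; None where unsupported."""
    try:
        page_size = os.sysconf("SC_PAGE_SIZE")
        phys_pages = os.sysconf("SC_PHYS_PAGES")
    except (AttributeError, ValueError, OSError):
        # os.sysconf does not exist on Windows; use Task Manager there instead.
        return None
    if page_size <= 0 or phys_pages <= 0:
        return None  # sysconf can return -1 when the value is unknown
    return page_size * phys_pages / 1024**3


if __name__ == "__main__":
    ram = detect_total_ram_gb()
    if ram is None:
        print("Could not detect RAM on this OS.")
    else:
        print(f"{ram:.1f} GB RAM -> {pick_model(ram) or 'below the 16GB minimum'}")
```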

Installing Ollama

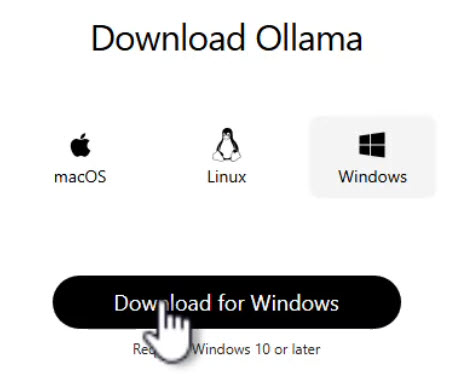

- Download Ollama from [Ollama.com](https://ollama.com).

- Install via the GUI (no command line needed).

- In Ollama's settings, set the model storage location, adjust the context length, and enable Airplane mode for fully offline operation.

Download Ollama
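The GUI install is all you need, but if you later plan to call the model from code, a quick way to confirm the Ollama server is running is to query its version endpoint (`GET /api/version`). This is a minimal sketch using only Python's standard library, assuming the default address `http://localhost:11434`; `ollama_version` is a hypothetical helper name.

```python
import json
import urllib.request
from typing import Optional

OLLAMA_URL = "http://localhost:11434"  # Ollama's default address


def ollama_version(base_url: str = OLLAMA_URL, timeout: float = 2.0) -> Optional[str]:
    """Return the running Ollama server's version string, or None if unreachable."""
    try:
        with urllib.request.urlopen(f"{base_url}/api/version", timeout=timeout) as resp:
            return json.load(resp).get("version")
    except (OSError, ValueError):
        # URLError and timeouts are OSError subclasses (server not started,
        # wrong port); ValueError covers a reply that is not valid JSON.
        return None


if __name__ == "__main__":
    version = ollama_version()
    print(f"Ollama {version} is running" if version else "Ollama is not reachable")
```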

Downloading GPT-OSS in Ollama

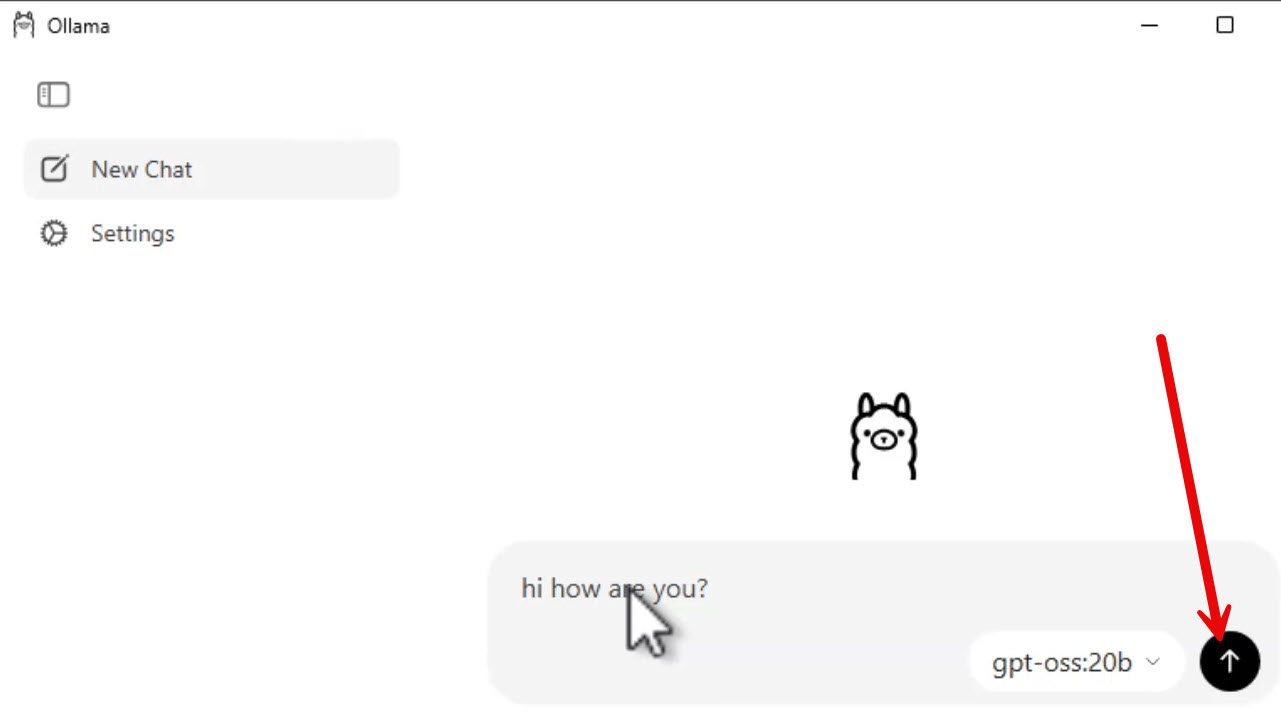

- Select GPT-OSS 20B (or 120B).

- Start downloading the model by submitting any prompt.

- Model sizes: about 13GB for 20B and about 65GB for 120B.

Start GPT-OSS Download
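Submitting a prompt in the GUI is one way to trigger the download. Ollama's REST API also exposes a pull endpoint (`POST /api/pull`) that streams progress as one JSON object per line, with `status` and, while layers download, `total` and `completed` byte counts. The sketch below assumes the default port and the `gpt-oss:20b` tag; `pull_progress` and `pull_model` are hypothetical helper names.

```python
import json
import urllib.request
from typing import Iterable, Iterator

OLLAMA_URL = "http://localhost:11434"  # default Ollama address


def pull_progress(lines: Iterable[bytes]) -> Iterator[str]:
    """Turn streamed /api/pull lines (one JSON object each) into messages."""
    for raw in lines:
        raw = raw.strip()
        if not raw:
            continue  # skip blank keep-alive lines
        event = json.loads(raw)
        if "error" in event:
            raise RuntimeError(event["error"])
        status = event.get("status", "")
        total, done = event.get("total"), event.get("completed")
        if total and done is not None:
            yield f"{status}: {100 * done / total:.1f}%"
        else:
            yield status


def pull_model(model: str = "gpt-oss:20b", base_url: str = OLLAMA_URL) -> None:
    """Ask the local Ollama server to download a model, printing progress."""
    req = urllib.request.Request(
        f"{base_url}/api/pull",
        data=json.dumps({"model": model}).encode(),  # data= makes this a POST
        headers={"Content-Type": "application/json"},
    )
    # No timeout: large downloads (about 65GB for 120B) can take a long time.
    with urllib.request.urlopen(req) as resp:
        for message in pull_progress(resp):  # the response iterates line by line
            print(message)
```

Keeping the parsing in `pull_progress` separate from the HTTP call means it can be checked against sample lines without downloading anything.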

Using the Model

- Test the model in Ollama’s chat interface.

- Watch memory usage in Task Manager.

- Shut down Ollama to release memory when done.
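Task Manager shows overall memory use, but Ollama itself can report which models are loaded and how much memory each occupies via `GET /api/ps`. It can also unload a model without quitting the app when it receives a generate request with `keep_alive` set to `0`; by default, Ollama unloads an idle model after about five minutes. This is a minimal standard-library sketch assuming the default port; the function names are hypothetical.

```python
import json
import urllib.request
from typing import Any, Dict, List

OLLAMA_URL = "http://localhost:11434"  # default Ollama address


def summarize_loaded(ps_response: Dict[str, Any]) -> List[str]:
    """Format an /api/ps payload as one line per loaded model."""
    lines = []
    for m in ps_response.get("models", []):
        total_gb = m.get("size", 0) / 1e9      # bytes -> GB
        vram_gb = m.get("size_vram", 0) / 1e9  # portion held in GPU memory
        lines.append(
            f"{m.get('name', '?')}: {total_gb:.1f} GB total, {vram_gb:.1f} GB on GPU"
        )
    return lines


def list_loaded(base_url: str = OLLAMA_URL) -> List[str]:
    """Ask the running server which models are currently in memory."""
    with urllib.request.urlopen(f"{base_url}/api/ps", timeout=5) as resp:
        return summarize_loaded(json.load(resp))


def unload(model: str = "gpt-oss:20b", base_url: str = OLLAMA_URL) -> None:
    """Release a model's memory without quitting Ollama.

    A generate request with no prompt and keep_alive=0 tells Ollama to unload it.
    """
    req = urllib.request.Request(
        f"{base_url}/api/generate",
        data=json.dumps({"model": model, "keep_alive": 0, "stream": False}).encode(),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req, timeout=30) as resp:
        resp.read()  # small status object; nothing else to use
```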

Calling GPT-OSS via API

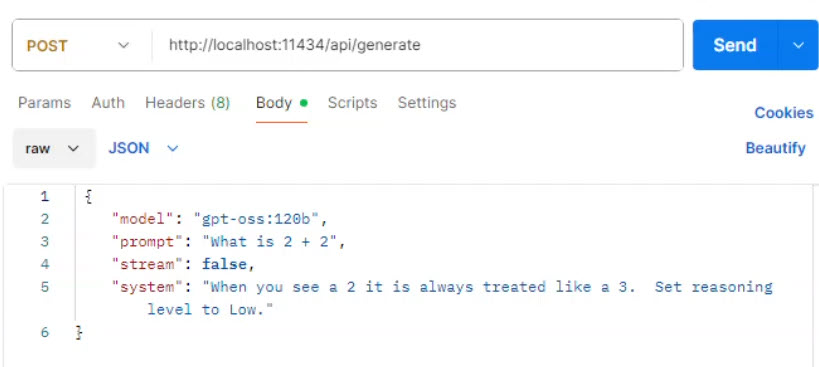

- The API listens on `localhost:11434` by default.

- Two main endpoints: `/api/generate` for single requests and `/api/chat` for multi-turn conversations.

- Example: Use Postman to send a request with custom system instructions and inspect the detailed reasoning output in the response.

Calling Ollama API
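Postman works well for exploring the API, and the same `/api/chat` request can be sent from code. This minimal sketch uses Python's standard library, assumes the default port and the `gpt-oss:20b` tag, and disables streaming so the reply arrives as one JSON object. Whether the reply includes a separate `thinking` field depends on the model and Ollama version, so the code treats it as optional. `build_chat_request` and `chat` are hypothetical helper names.

```python
import json
import urllib.request
from typing import Any, Dict

OLLAMA_URL = "http://localhost:11434"  # default Ollama address


def build_chat_request(system: str, user: str, model: str = "gpt-oss:20b") -> Dict[str, Any]:
    """Build a non-streaming /api/chat payload with a custom system prompt."""
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": system},
            {"role": "user", "content": user},
        ],
        "stream": False,  # one JSON object instead of a stream of chunks
    }


def chat(payload: Dict[str, Any], base_url: str = OLLAMA_URL) -> Dict[str, Any]:
    """POST the payload to /api/chat and return the assistant message dict."""
    req = urllib.request.Request(
        f"{base_url}/api/chat",
        data=json.dumps(payload).encode(),
        headers={"Content-Type": "application/json"},
    )
    # Generous timeout: local generation on a laptop CPU can be slow.
    with urllib.request.urlopen(req, timeout=600) as resp:
        return json.load(resp)["message"]


if __name__ == "__main__":
    payload = build_chat_request(
        system="You are a concise assistant. Answer in two sentences.",
        user="Why run an LLM locally?",
    )
    try:
        message = chat(payload)
    except OSError as exc:  # URLError is an OSError subclass
        print("Ollama is not reachable:", exc)
    else:
        # Reasoning models may return their reasoning in a separate field.
        if message.get("thinking"):
            print("Reasoning:", message["thinking"])
        print("Answer:", message.get("content", ""))
```

For a multi-turn conversation, append the returned assistant message and the next user message to `payload["messages"]` and call `chat` again. `/api/generate` instead takes a single `prompt` string, with an optional `system` field.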

Conclusion

With GPT-OSS and Ollama, running a powerful LLM offline is no longer just for enterprise AI labs. Whether for data privacy, offline use, or cost savings, this setup opens the door to fully local AI applications.

Try installing the 120B version if your hardware can handle it, and explore integrating GPT-OSS into your local applications.
